Cristina Dobre

selection of projects, talks, awards

IVA22

September 2022

I’m back at IVA22 presenting the work: More than buttons on controllers: engaging social interactions in narrative VR games through social attitudes detection. It highlights the industry-academia collaboration, focusing on the contributions of building agents for the games industry and showcasing the work’s benefits for the industry overall. So if you’re there, swing by on Thursday 8th Sept!

I’m also presenting my PhD work overall as a Doctoral Consortium paper on Tuesday 6th of Sept. It covers the research done so far in my PhD, very much like a synopsis of my thesis.

The papers will be available soon!

Develop22 in Brighton

July 2022

My research on implicit and engaging social interactions through social attitudes detection was showcased as a short video part of Prof. Peter Cowling’s talk at Develop Conference. It was a great experience to be part of the largest games conference in the UK!

LBW at CHI2022

April 2022

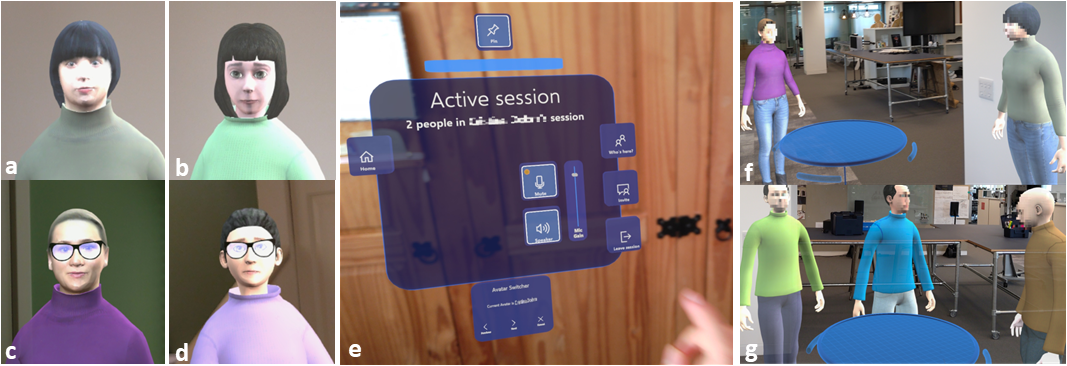

An initial research outcome from the summer 2021 research project with MSR Cambridge has been published at CHI2022! The article is entitled: Nice is Different than Good: Longitudinal Communicative Effects of Realistic and Cartoon Avatars in Real Mixed Reality Work, and was presented in April at CHI22. Check out the preview video and the presentation video.

Journal article at Springer VR

March 2022

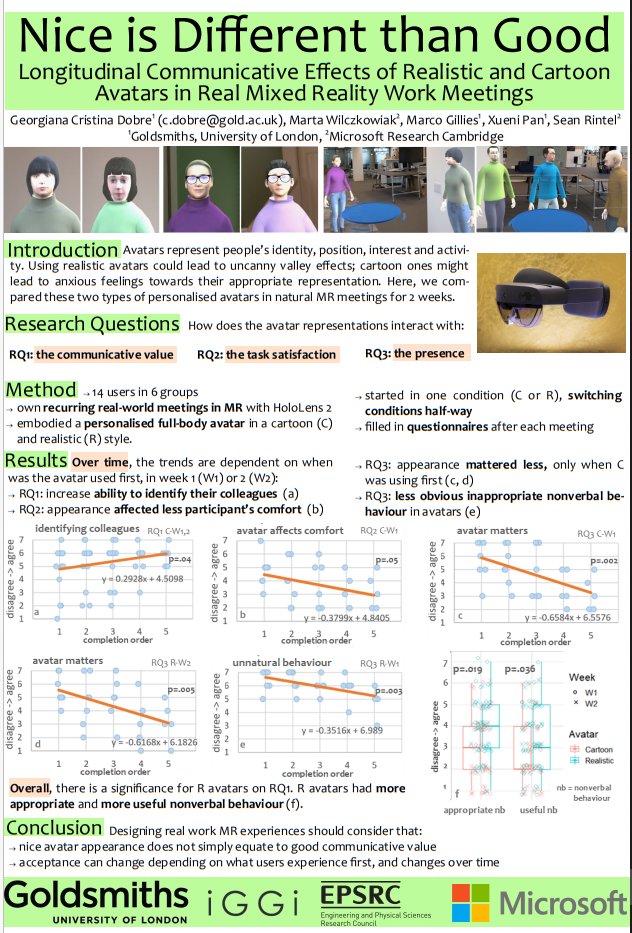

The research outcome that started with my first internship in 2019 is officially out! We investigated an immersive ML for social attitude detection in VR narrative games.

The work is published with Springer Virtual Reality, and the full paper can be found here: Immersive machine learning for social attitude detection in virtual reality narrative games

Special thanks to my supervisors and co-authors Sylvia Pan and Marco Gillies, to the industry collaborators: Dream Reality Interactive and Maze Theory. The teaser video is upcoming!

ICMI 2021

October 2021

I presented my work at the International Conference on Multimodal Interaction (ICMI) on the gaze dynamics in dyads during an unstructured conversation using multimodal data. This work was in collaboration with Antonia Hamilton’s Lab who kindly provided me with the data.

The main results were: (1) Listeners look more at the other person than speakers do. (2) When looked at, people switch between direct gaze and averted gaze with a higher frequency than when they are not looked at. (3) When not looked at, people look more at the other person’s face (direct gaze) than somewhere else (averted gaze).

You can read the full paper here: Direct Gaze Triggers Higher Frequency of Gaze Change: An Automatic Analysis of Dyads in Unstructured Conversation
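To make "frequency of gaze change" in result (2) concrete, here is a minimal sketch of how such a rate could be computed from per-frame gaze labels. It is only an illustration and not the analysis from the paper: the function name, the boolean per-frame encoding and the frame rate are all assumptions.

```python
def switch_rate_when(own_direct, condition, fps):
    """Gaze switches per second, counted only while `condition` holds.

    own_direct: per-frame booleans, True = direct gaze, False = averted gaze
                (hypothetical encoding, not taken from the paper).
    condition:  per-frame booleans, e.g. True when the partner is looking
                at this person.
    fps:        frames per second of the recording (assumed constant).
    """
    frames_in_condition = sum(condition)
    if frames_in_condition == 0:
        return 0.0
    # A switch is counted only between two consecutive frames that are both
    # inside the condition, so gaps between segments add no spurious switches.
    switches = sum(
        1
        for i in range(1, len(own_direct))
        if condition[i] and condition[i - 1] and own_direct[i] != own_direct[i - 1]
    )
    return switches / (frames_in_condition / fps)


# Toy data: 8 frames at 4 fps. The partner looks during the first 4 frames only.
own = [True, False, True, False, True, True, True, True]
partner_looking = [True, True, True, True, False, False, False, False]
partner_not_looking = [not p for p in partner_looking]

rate_looked_at = switch_rate_when(own, partner_looking, fps=4)
rate_not_looked_at = switch_rate_when(own, partner_not_looking, fps=4)
```

In this toy example the person changes gaze on every frame while being looked at and not at all otherwise, which mirrors the direction of result (2) but says nothing about its size in the real data.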

Internship at Microsoft Research Cambridge

Summer 2021

Over the summer of 2021, I had the opportunity to meet and work alongside a super talented and ambitious group of people from Microsoft Research Cambridge. For four months we cooked up a cutting-edge research project that would impact and feed into the next developments of collaboration in immersive environments (VR/MR).

I was part of the Future of Work group, supervised by Sean Rintel. I was very closely collaborating with Marta Wilczkowiak from the HoloLens group. The aim of the project was to understand how the looks of avatars impact the interaction in virtual environments. The current work in this area is not conclusive and there’s no literature from longitudinal studies.

Thus, we rolled up our sleeves and designed a study comparing two types of personalised, full-body avatars. We considered cartoon-like and realistic avatars, all participants having their own avatars (in two styles!). Participants would run their usual, recurrent meetings every day for two weeks swapping the avatars halfway through. All meetings were in Mixed Reality (MR), using the HoloLens 2 device.

After this design period, the more hands-on part started. It involved implementing the MR environment for the networked meeting, creating a workflow for animating full-body avatars, building a telemetry system, and recruiting participants from different departments and time zones. I screened 30+ participants, out of which we recruited 14 (7 female, 6 male, 1 non-binary) forming 6 groups. Each participant was using a HoloLens 2 device and could see and interact in real time with their colleagues’ full-body avatars.

This project was such an enriching experience! My PhD research was mostly focused on VR, hence I got first-hand experience with MR applications/devices and the differences from the VR ones. I would love to work on a project combining both VR and MR mediums!

This was also my first longitudinal study. I have got an even clearer understanding of the benefits of prolonged exposure in the area of immersive virtual environments (MR/VR). Going forward, I am keen on designing more longitudinal research studies and I’ll appreciate much more the published longitudinal research out there!

Workshop at IVA2020

October 2020

The 20th International Conference on Intelligent Virtual Agents (IVA) was supposed to take place in Glasgow, but like all other conferences this year, it happened online. We presented a workshop there on Immersive Interaction Design for Intelligent Virtual Agents. It looked at how approaches to interaction design for body movement can be applied to interaction with IVAs, in particular to non-verbal communication with virtual agents. It was a hands-on workshop where Nicola Plant and Clarice Hilton introduced a new interactive machine learning tool, InteractML. Those who attended used their own body movements to explore new ways of interacting with virtual agents and discovered InteractML, which allowed for rapid implementation of these designs.

The organisers of IVA chose the Gather.Town platform to host the event. From my perspective, it went really well. I enjoyed being able to move around to talk to people and to make sure I was in the auditorium on time for the main talk sessions. They also had Salons where you’d stay at the same table with others and have a discussion driven by a senior member of the community. I enjoyed these the most and I think they brought a bit of that networking feeling from being at an in-person conference.

Here I also got to meet Hannes Högni Vilhjálmsson, whose research is very interesting and diverse. Along with other organisers, he worked hard to make sure the conference went smoothly and also organised a VR afterparty with a Halloween theme.

Internship at Microsoft Research with Jaron Lanier

June 2020

I was very excited to have the chance to work as an intern with Jaron Lanier at the Office of the CTO at Microsoft Research. Along with M Eifler and others in the lab, we were supposed to work on a very interesting project that I probably can’t say much about. Due to the COVID-19 pandemic, my internship had to be postponed to summer 2021. Unfortunately, later in the year, Microsoft Research decided to cancel the offered internships of those not residing in the US or Canada. As much as I would have enjoyed and learned from this internship, it couldn’t happen in these challenging times. Hopefully, there will be a way to cross paths and work together with Jaron Lanier at some point in the future.

Award: Google Women Techmakers Scholarship

April 2020

I was so happy to find out that I was awarded the Google Women Techmakers Scholarship. Unfortunately, the trip to the US and the workshop were cancelled due to the COVID-19 pandemic. There we would have met all the other scholars and we would have had workshops and networking events. Instead, we had a very nicely organised online workshop where we met most of the scholars and got a very interesting talk from Jade Raymond, VP and Head of Stadia Games and Entertainment.

Webinar at Immersive UK

April 2020

Together with Sylvia Pan and Marco Gillies, I presented some of our work on AI-driven characters for virtual environments. The webinar scratched the surface of how we can develop and drive virtual characters in interactions in VR, based on what we know from an academic and industry perspective.

Award: Rabin Ezra Scholarship

March 2020

I was awarded the Rabin Ezra Scholarship which supports junior researchers. The Trust awards scholarships each year and they usually have the deadline at the beginning of December.

Winner of VIVE Dev Jam

January 2020

Our Pinky Mega team 4 (very spontaneous name, I know) won the first prize at the VIVE Developer Jam.

The team was formed of Carlos Gonzalez Diaz, Claire Wu, Lili Eva Bartha and myself and we put together an active listening project where the user would drive the interaction (and the discourse) using their implicit behaviour.

It was a lot of fun but also long hours of work. We were very sleep deprived after the whole weekend but happy that we got the chance to meet new people and to play with some pretty cool new toys such as the lip tracker from VIVE. Goldsmiths University of London wrote a blog post about us and other Goldsmiths students who attended the Jam.

Speaker at the AI and Character Driven Immersive Experiences Workshop

December 2019

I ended 2019 with a workshop on how immersive experiences can be driven by AI and virtual characters. As soon as I finished my internship, I started helping plan this workshop with Sylvia Pan and Marco Gillies. It was an event that showed work from academia (such as the work of Prof Anthony Steed or Prof Antonia Hamilton) but also from the industry world (Royal Shakespeare Company, or HTC VIVE).

I also had the chance to give a full presentation (and a demo) on the work I’ve done during my internship. It included the things I’ve learned and the AI tool we’ve developed. More on this to come, hopefully as a more polished project and with the published results.

Poster and DC at ACII19

September 2019

I’ve been following the research presented at ACII (International Conference on Affective Computing & Intelligent Interaction) since the start of my research journey. I’m so happy to have the chance to present some of my preliminary work as a doctoral consortium paper and poster.

I have to say, I was very nervous about presenting it, but after that, I had lots of fun attending the rest of the conference. I could see in person some of the researchers I follow a lot such as Catherine Pelachaud, Stacy Marsella, Jonathan Gratch, Rosalind Picard, Daniel McDuff and many others.

There were three keynote speakers: Simon Baron-Cohen, Lisa Feldman Barrett and Thomas Ploetz. All three were very insightful, and Lisa Feldman Barrett in particular gave a very on-point presentation on machines perceiving emotions. The keynote recording is on YouTube; do have a look if you’re interested in this subject. After the conference, I read her book How Emotions are Made, which was a very good and easy-to-follow book.

Imitation Learning Workshop

September 2019

Along with Carlos Gonzalez Diaz, I organised a workshop for the annual Intelligent Games & Game Intelligence (IGGI) Conference in York, UK. We introduced the Unity3D ML-Agents platform and the algorithms that can be used within the framework, with a focus on the imitation learning one: Behaviour Cloning. The attendees went through a tutorial to build a game by setting up the ML-Agents framework, training it using TensorFlow and testing it back in the game engine.

If you’re interested, you can have a look at the slides presented and at the GitHub repo used in the workshop.

Doctoral Research Intern

Summer-Autumn 2019

Collaborating with two games studios (Dream Reality Interactive and Maze Theory) and with academic support from Goldsmiths UoL, we created an immersive ML pipeline for Virtual Reality experiences, in a project funded by Innovate UK.

The work was based on reinforcement learning and imitation learning concepts using the Unity ML-Agents platform. The ML pipeline we built detects a social attitude (such as sympathy, aggression or social engagement) during an interaction in VR between the player and a non-player character. Together we conceptualised the pipeline, planned the study design, built the environment for data collection, trained & evaluated the model and part-integrated the pipeline in the final game product.

Overall, this work introduces an immersive ML pipeline for detecting social attitudes and demonstrates how creatives could use ML and VR to expand their ability to design more engaging experiences. Here, Goldsmiths wrote a news article about it.

Teaching 3D Virtual Environments and Animation

Spring Term 2019

This spring term I was an associate lecturer for the VR module at Goldsmiths. Along with Leshao Zhang, I took care of the labs, while Sylvia Pan was running the lectures. I was mostly teaching the basics of Unity3D for VR applications and helping students with VR projects (just imagine lots of brainstorming, troubleshooting, code debugging and testing cool(!!) VR games and applications). They came up with really cool projects; there will be a blogpost about this soon! For now, here is a short list of some of them: Zombie Game, Furniture assembly, Bee Simulator (short video below), Stress-relief smashing game, Water Meditation and Immersive 3D creation and visualisation tool. Marco Gillies wrote a more in-depth blog post on Medium about it.

I hope the students benefited a lot from this module; it was very interesting for me too. It was also my first teaching experience in a university environment: nothing like teaching kids creative coding in afterschool clubs!! A few weeks ago the module had a guest speaker too: Francesco Giordana from Moving Picture Company. It was incredible to see that they are using VR for film making. He described something called Virtual Production, which allows previewing Computer-Generated (CG) elements in real time on a film set. It also enables the filmmakers to scout the environment, capture, add animations, iterate and shoot with virtual cameras, everything within the virtual environment! They had projects where all of this was done remotely too, with people from around the world.

The 3D Virtual Environments and Animation module will run again next year, and I look forward to being part of this again. There’s also a brand new MA/MSc Virtual and Augmented Reality that starts next September as well, if it sounds like your thing!

Virtual Social Interaction

December 2018

The 4th Workshop on Virtual Social Interaction took place on 17-18 December 2018 at Goldsmiths UoL. I’m glad I had the chance to attend and help organise this event.

Marco Gillies wrote a nice overview of the event; have a look at his VSI18 blogpost. It was indeed such an interesting workshop across both days, featuring all-female keynote speakers, a range of inspiring talks and a buzzing poster session.

If you joined the workshop and want to stay in touch (or if you want to hear updates about it + other related news) join our VSI Google Group!

Oh, and stay tuned for VSI2020!!

W|E

August 2018

In August 2018 I took part in this amazing one-week Signal & Noise workshop organised by the MIT Media Lab in Berlin. The track I was part of was VR/AR-based learning experiences, led by Scott Greenwald.

We did indeed create a learning experience about the Berlin Wall based on first-hand escape stories. Media Lab created this video to showcase the behind-the-scenes process of our project and Die Welt wrote this article about our work! One week was enough to prototype this, but we want to put together a final product, so this is now an ongoing project. How exciting! Find out more about the project and the (great!) people working on it on the W|E website and follow us on Twitter to see what we are up to!

VR Escape Room experience

May 2018

This is a game prototype for an escape-room/puzzle-like experience in 6DoF VR. The aim is to exit the room by opening the main door. The main door is initially locked (shown by a red door). To unlock it, the user needs to solve a puzzle. It can be solved by finding the right objects in the room and placing them on the table next to their ‘trace’. The shapes on the table and the clue on the screen should help the player pick the right objects and solve the puzzle. When the right objects are placed on the table, the door gets unlocked (its colour turns green) and the player can open it and exit the room. Here’s a short overview of what the room looks like.

This prototype was created by myself in Unity 3D using Oculus SDK and VRTK for the Game Development module (14/05/18 - 25/05/18).
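The unlock rule described above can be sketched in a few lines. This is a language-agnostic illustration in Python, not the Unity/VRTK code of the prototype; the class names, the object names and the choice that the door stays unlocked once the puzzle is solved are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Door:
    """Main door: red while locked, green once unlocked."""
    locked: bool = True

    @property
    def colour(self):
        return "red" if self.locked else "green"


@dataclass
class PuzzleTable:
    """Table that unlocks the door once every required object is on it."""
    required: frozenset  # hypothetical object names, e.g. {"cube", "pyramid"}
    door: Door
    placed: set = field(default_factory=set)

    def place(self, obj):
        self.placed.add(obj)
        # Assumption: extra (wrong) objects on the table do not block the
        # solution, and the door stays unlocked once the puzzle is solved.
        if self.required <= self.placed:
            self.door.locked = False


door = Door()
table = PuzzleTable(required=frozenset({"cube", "pyramid"}), door=door)

table.place("cube")
colour_after_first = door.colour   # still locked: one object is missing

table.place("pyramid")
colour_after_second = door.colour  # all required objects placed
```

In a game engine the same check would typically run in whatever callback fires when an object is snapped onto the table.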

GGJ Game: QT-Labs

Jan 2018

This is a VR game created in Unity3D over a weekend during the 2018 Global Game Jam (Queen Mary UoL site). The team had six members: one sound effect & music member, two 3D and 2D artists who created and designed the game assets and three developers. I was one of the three developers, with the role of implementing the game logic and testing the game. Here’s our project overview on the GGJ website and here’s a short gameplay.

StarWing Genetica

Nov 2017

This is a game developed in Unity over the course of a two-week Game Development module at Goldsmiths UoL. StarWing Genetica is a single-player space-themed shooter game, partly inspired by classic arcade games. In this game, a genetic algorithm is used to evolve better enemy agents over time. Here’s a short gameplay.

It was designed and built by a team of three: Charlie Ringer, Valerio Bonametti, and myself.
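For readers unfamiliar with the idea, here is a minimal sketch of a genetic algorithm evolving enemy parameters. It is not the code from StarWing Genetica: the genome layout, the stand-in fitness function and all parameter values are assumptions chosen for illustration. In a real game, fitness would come from how each enemy performed against the player.

```python
import random

# Hypothetical genome: [speed, fire_rate, evasion], each value in [0, 1].
GENOME_LENGTH = 3


def fitness(genome):
    """Stand-in fitness: closeness to an arbitrary 'ideal' enemy profile."""
    target = [0.8, 0.6, 0.4]
    return -sum((g - t) ** 2 for g, t in zip(genome, target))


def random_genome(rng):
    return [rng.random() for _ in range(GENOME_LENGTH)]


def crossover(a, b, rng):
    """One-point crossover between two parent genomes."""
    point = rng.randint(1, GENOME_LENGTH - 1)
    return a[:point] + b[point:]


def mutate(genome, rng, rate=0.2, scale=0.1):
    """Perturb each gene with probability `rate`, clamped to [0, 1]."""
    return [
        min(1.0, max(0.0, g + rng.gauss(0, scale))) if rng.random() < rate else g
        for g in genome
    ]


def evolve(generations=30, population_size=20, elite=2, seed=0):
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(population_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        # The fitter half breeds; the top `elite` genomes survive unchanged.
        parents = population[: population_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - elite)
        ]
        population = population[:elite] + children
    population.sort(key=fitness, reverse=True)
    return population[0], best_per_generation


best, history = evolve()
```

Because the elite genomes are carried over unchanged, the best fitness in `history` never decreases from one generation to the next.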

So what now? Maybe check out what I’m doing now or see some basic stuff about me!